AIRO (AI Risk Ontology) is an ontology for expressing risk of AI systems based on the requirements of the AI Act, ISO/IEC 23894 on AI risk management, and the ISO 31000 series of standards. AIRO assists stakeholders in determining "high-risk" AI systems, maintaining and documenting risk information, performing impact assessments, and achieving conformity with AI regulations.

The AI Act aims to avoid the harmful impacts of AI on critical areas such as health, safety, and fundamental rights by setting down obligations that are proportionate to the type and severity of risk posed by the system. It identifies specific areas, and applications of AI within them, that constitute "high-risk" and carry additional obligations (Art. 6), which require providers of high-risk AI systems to identify and document risks associated with AI systems at all stages of development and deployment (Art. 9).

Existing risk management practices consist of maintaining, querying, and sharing information associated with risks for compliance checking, demonstrating accountability, and building trust. Maintaining information about risks for AI systems is a complex task given the rapid pace at which the field progresses, as well as the complexities of AI lifecycle and data governance processes, in which several entities are involved and need to share information for risk assessments. In turn, investigations based on this information are difficult to perform, which makes auditing and compliance assessment a challenge for organisations and authorities. To address some of these issues, the AI Act relies on the creation of standards that alleviate some of the compliance-related obligations and tasks (Art. 40).

We propose an ontology-based approach to the information that must be maintained and used for compliance and conformance with the AI Act, utilising open data specifications for documenting risks and performing AI risk assessment activities. Such data specifications utilise interoperable machine-readable formats to enable automation in information management, querying, and verification for self-assessment and third-party conformity assessments. Additionally, they enable automated tools for supporting AI risk management that can both import and export information meant to be shared with stakeholders such as AI users, providers, and authorities.

AIRO is an ontology for expressing risk of harm associated with AI systems based on the EU AI Act and the key standards in the ISO 31000 series, including ISO 31000:2018 Risk management – Guidelines and ISO 31073:2022 Risk management – Vocabulary. AIRO assists with expressing risk of AI systems as per the requirements of the AI Act, in a machine-readable, formal, and interoperable manner through the use of semantic web technologies. The purpose of AIRO is to express AI risks to enable organisations to represent their AI systems and the associated AI risks and determine whether their AI systems are "high-risk" as per Annex III of the AI Act and

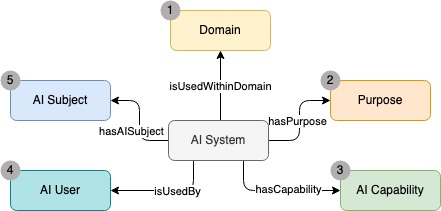

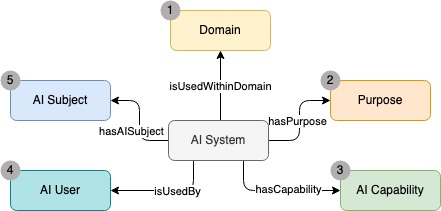

We analysed the requirements of the AI Act, in particular the list of high-risk systems in Annex III, and identified the specific concepts whose combinations determine whether an AI system is considered high-risk. These are listed in Table 1 in the form of competency questions, concepts, and relations with the AI system.

| ID | Competency Question | Concept | Relation |
|---|---|---|---|
| 1 | In which domain is the AI system used? | Domain | isAppliedWithinDomain |
| 2 | What is the purpose of the AI system? | Purpose | hasPurpose |
| 3 | What is the capability of the AI system? | AICapability | hasCapability |
| 4 | Who is the deployer of the AI system? | AIDeployer | isDeployedBy |
| 5 | Who is the AI subject? | AISubject | hasAISubject |
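
The five relations in Table 1 can be read as a checklist for describing an AI system. The following is a minimal sketch, using plain Python tuples as triples rather than an RDF library, of how a description might be checked for unanswered competency questions. The `https://example.org/` namespace, the instance names, and the helper `unanswered_questions` are hypothetical and introduced only for illustration; the sketch checks completeness of the description and does not itself decide whether a system is high-risk.

```python
AIRO = "https://w3id.org/airo#"
EX = "https://example.org/"  # hypothetical namespace, for illustration only

# Relation that answers each competency question in Table 1, keyed by question ID.
COMPETENCY_RELATIONS = {
    1: AIRO + "isAppliedWithinDomain",
    2: AIRO + "hasPurpose",
    3: AIRO + "hasCapability",
    4: AIRO + "isDeployedBy",
    5: AIRO + "hasAISubject",
}


def unanswered_questions(triples, system):
    """Return the IDs of competency questions that have no triple for `system`."""
    used = {p for (s, p, _o) in triples if s == system}
    return [qid for qid, rel in sorted(COMPETENCY_RELATIONS.items()) if rel not in used]


# Hypothetical AI system description as (subject, predicate, object) triples.
system = EX + "proctoring_system"
triples = [
    (system, AIRO + "isAppliedWithinDomain", EX + "Education"),
    (system, AIRO + "hasPurpose", EX + "DetectCheatingInExams"),
    (system, AIRO + "hasCapability", EX + "BehaviourAnalysis"),
    (system, AIRO + "isDeployedBy", EX + "University"),
]

print(unanswered_questions(triples, system))  # [5]: the AI subject is not yet stated
```

Once a `hasAISubject` triple is added, the function returns an empty list, meaning every concept in Table 1 has a value for that system.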

| Prefix | Namespace |
|---|---|
| airo | <https://w3id.org/airo#> |
| dpv | <https://w3id.org/dpv#> |
| dqv | <http://www.w3.org/ns/dqv#> |
| dcat | <http://www.w3.org/ns/dcat#> |
| owl | <http://www.w3.org/2002/07/owl#> |
| rdf | <http://www.w3.org/1999/02/22-rdf-syntax-ns#> |
| terms | <http://purl.org/dc/terms/> |
| xml | <http://www.w3.org/XML/1998/namespace> |
| xsd | <http://www.w3.org/2001/XMLSchema#> |
| rdfs | <http://www.w3.org/2000/01/rdf-schema#> |
| prov | <http://www.w3.org/ns/prov#> |
| dc | <http://purl.org/dc/elements/1.1/> |
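
Prefixed names such as `airo:AISystem`, used throughout the term tables, abbreviate full IRIs by substituting the namespace for the prefix. As a rough sketch of that expansion, assuming only the `airo`, `dpv`, and `dqv` entries from the prefix list (the helper names `expand` and `compact` are hypothetical):

```python
# Subset of the prefix list above; extend as needed.
PREFIXES = {
    "airo": "https://w3id.org/airo#",
    "dpv": "https://w3id.org/dpv#",
    "dqv": "http://www.w3.org/ns/dqv#",
}


def expand(curie, prefixes=PREFIXES):
    """Expand a prefixed name like 'airo:AISystem' into a full IRI."""
    prefix, sep, local = curie.partition(":")
    if not sep or prefix not in prefixes:
        raise KeyError(f"unknown prefix in {curie!r}")
    return prefixes[prefix] + local


def compact(iri, prefixes=PREFIXES):
    """Abbreviate a full IRI with a known prefix; return it unchanged otherwise."""
    for prefix, namespace in prefixes.items():
        if iri.startswith(namespace):
            return f"{prefix}:{iri[len(namespace):]}"
    return iri


print(expand("airo:AISystem"))                 # https://w3id.org/airo#AISystem
print(compact("https://w3id.org/dpv#hasData"))  # dpv:hasData
```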

| IRI | https://w3id.org/airo#AISystem |

| Term | AISystem |

| Label | AI System |

| Definition | A machine-based system that is designed to operate with varying levels of autonomy and that may exhibit adaptiveness after deployment, and that, for explicit or implicit objectives, infers, from the input it receives, how to generate outputs such as predictions, content, recommendations, or decisions that can influence physical or virtual environments. |

| Source | EU AI Act, Article 3(1) |

| IRI | https://w3id.org/airo#AICapability |

| Term | AICapability |

| Label | AI Capability |

| Definition | The capability of an AI system that enables realisation of the system's purposes. |

| IRI | https://w3id.org/airo#AITechnique |

| Term | AITechnique |

| Label | AI Technique |

| Definition | Approaches or techniques used in development of a system. |

| IRI | https://w3id.org/airo#Modality |

| Term | Modality |

| Label | Modality |

| Definition | The form in which the system exists or is represented. |

| IRI | https://w3id.org/airo#AILifecyclePhase |

| Term | AILifecyclePhase |

| Label | AI Lifecycle Phase |

| Definition | A phase of the AI lifecycle, which describes the evolution of the system from conception through retirement. |

| IRI | https://w3id.org/airo#AIComponent |

| Term | AIComponent |

| Label | AI Component |

| Definition | Component (element) of an AI system |

| IRI | https://w3id.org/airo#AIModel |

| Term | AIModel |

| Label | AI Model |

| Definition | Mathematical construct that generates an inference or prediction, based on input data. |

| SubClassOf | airo:AIComponent |

| Source | ISO/IEC TR 24028, 3.24 |

| IRI | https://w3id.org/airo#GPAIModel |

| Term | GPAIModel |

| Label | General Purpose AI Model |

| Definition | An AI model, including where such an AI model is trained with a large amount of data using self-supervision at scale, that displays significant generality and is capable of competently performing a wide range of distinct tasks regardless of the way the model is placed on the market and that can be integrated into a variety of downstream systems or applications. |

| SubClassOf | airo:AIModel |

| Source | EU AI Act, Article 3(63) |

| IRI | https://w3id.org/airo#Data |

| Term | Data |

| Label | Data |

| Definition | Refers to dataset and the broad concept of data. |

| IRI | https://w3id.org/airo#HardwarePlatform |

| Term | HardwarePlatform |

| Label | Hardware Platform |

| Definition | The hardware on which the system is run. |

| IRI | https://w3id.org/airo#Input |

| Term | Input |

| Label | Input |

| Definition | Input for which the system or component generates output. |

| IRI | https://w3id.org/airo#Output |

| Term | Output |

| Label | Output |

| Definition | Output generated by the system. |

| IRI | https://w3id.org/airo#AIQuality |

| Term | AIQuality |

| Label | AI Quality |

| Definition | Refers to quality of an AI system or component. |

| IRI | https://w3id.org/airo#Version |

| Term | Version |

| Label | Version |

| Definition | A unique number or name that is assigned to a unique state of an AI system |

| IRI | https://w3id.org/airo#License |

| Term | License |

| Label | License |

| Definition | Represents a copyright license. |

| IRI | https://w3id.org/airo#Domain |

| Term | Domain |

| Label | Domain |

| Definition | An area, sector, or industry that is associated with economic activities. |

| IRI | https://w3id.org/airo#Purpose |

| Term | Purpose |

| Label | Purpose |

| Definition | The end goal for which an entity is used or an action is taken. |

| IRI | https://w3id.org/airo#LocalityOfUse |

| Term | LocalityOfUse |

| Label | Locality Of Use |

| Definition | The area, e.g. facility or institution, in which an entity is used. |

| IRI | https://w3id.org/airo#ModeOfOutputControllability |

| Term | ModeOfOutputControllability |

| Label | Mode Of Output Controllability |

| Definition | The level of control over AI outputs exercised by humans. |

| IRI | https://w3id.org/airo#AutomationLevel |

| Term | AutomationLevel |

| Label | Automation Level |

| Definition | Refers to the degree of automation under which an AI system operates. |

| IRI | https://w3id.org/airo#HumanInvolvement |

| Term | HumanInvolvement |

| Label | Human Involvement |

| Definition | Indicates involvement of humans. |

| IRI | https://w3id.org/airo#RiskConcept |

| Term | RiskConcept |

| Label | Risk Concept |

| Definition | An umbrella term for referring to risk, risk source, consequence, and impact. |

| IRI | https://w3id.org/airo#RiskSource |

| Term | RiskSource |

| Label | Risk Source |

| Definition | An element that has the potential to give rise to a risk. |

| SubClassOf | airo:RiskConcept |

| Source | ISO 31073:2022, 3.3.10 |

| IRI | https://w3id.org/airo#Risk |

| Term | Risk |

| Label | Risk |

| Definition | The state of uncertainty associated with an AI system that has the potential to cause harms and is expressed in terms of risk sources, consequences, impacts, likelihood, and severity. |

| SubClassOf | airo:RiskConcept |

| IRI | https://w3id.org/airo#Hazard |

| Term | Hazard |

| Label | Hazard |

| Definition | Source of potential harm. |

| SubClassOf | airo:RiskSource |

| Source | ISO 31073:2022, 3.3.12 |

| IRI | https://w3id.org/airo#Threat |

| Term | Threat |

| Label | Threat |

| Definition | Potential source of danger, harm, or other undesirable outcome. |

| SubClassOf | airo:RiskSource |

| Source | ISO 31073:2022, 3.3.13 |

| IRI | https://w3id.org/airo#Misuse |

| Term | Misuse |

| Label | Misuse |

| Definition | The use of an AI system or component in a way that is not in accordance with its intended purpose. |

| SubClassOf | airo:Risk |

| IRI | https://w3id.org/airo#Consequence |

| Term | Consequence |

| Label | Consequence |

| Definition | Direct outcome of risk affecting objectives. |

| SubClassOf | airo:RiskConcept |

| Source | ISO 31000, 3.6 [with modifications], ISO 31073:2022, 3.3.18 [with modifications] |

| IRI | https://w3id.org/airo#Impact |

| Term | Impact |

| Label | Impact |

| Definition | The outcome of a consequence on individuals, groups, society, environment, etc. |

| SubClassOf | airo:Consequence |

| IRI | https://w3id.org/airo#AreaOfImpact |

| Term | AreaOfImpact |

| Label | Area Of Impact |

| Definition | Areas or aspects impacted by an AI system, e.g. physical health, right to privacy. |

| IRI | https://w3id.org/airo#Vulnerability |

| Term | Vulnerability |

| Label | Vulnerability |

| Definition | Refers to properties of an entity, e.g. AI system or AI component, resulting in susceptibility to a risk source. |

| Source | ISO 31073:2022, 3.3.21 with modifications |

| IRI | https://w3id.org/airo#RiskControl |

| Term | RiskControl |

| Label | Risk Control |

| Definition | A measure that maintains and/or modifies risk (and risk concepts) |

| Source | ISO 31073:2022, 3.3.33 (with expansion of scope) |

| IRI | https://w3id.org/airo#Likelihood |

| Term | Likelihood |

| Label | Likelihood |

| Definition | Chance of something happening. |

| Source | ISO 31073:2022, 3.3.16 |

| IRI | https://w3id.org/airo#Severity |

| Term | Severity |

| Label | Severity |

| Definition | Indicates the level of severity. |

| IRI | https://w3id.org/airo#Stakeholder |

| Term | Stakeholder |

| Label | Stakeholder |

| Definition | Represents any individual, group or organization that can affect, be affected by or perceive itself to be affected by a decision or activity. |

| Source | ISO/IEC 22989, 3.5.13 |

| IRI | https://w3id.org/airo#AIOperator |

| Term | AIOperator |

| Label | AI Operator |

| Definition | Refers to a provider, product manufacturer, deployer, authorised representative, importer or distributor. |

| SubClassOf | airo:Stakeholder |

| Source | EU AI Act, Article 3(8) |

| IRI | https://w3id.org/airo#AIProvider |

| Term | AIProvider |

| Label | AI Provider |

| Definition | A natural or legal person, public authority, agency or other body that develops an AI system or a general-purpose AI model or that has an AI system or a general-purpose AI model developed and places it on the market or puts the AI system into service under its own name or trademark, whether for payment or free of charge. |

| SubClassOf | airo:AIOperator |

| Source | EU AI Act, Article 3(3) |

| IRI | https://w3id.org/airo#AIDeployer |

| Term | AIDeployer |

| Label | AI Deployer |

| Definition | Any natural or legal person, public authority, agency or other body using an AI system under its authority except where the AI system is used in the course of a personal non-professional activity. |

| SubClassOf | airo:AIOperator |

| Source | EU AI Act, Article 3(4) |

| IRI | https://w3id.org/airo#AIDeveloper |

| Term | AIDeveloper |

| Label | AI Developer |

| Definition | An organisation or entity that is concerned with the development of AI services and products. |

| SubClassOf | airo:Stakeholder |

| Source | ISO/IEC 22989, 5.19.3.2 |

| IRI | https://w3id.org/airo#AIUser |

| Term | AIUser |

| Label | AI User |

| Definition | Individual or group that interacts with a system. |

| SubClassOf | airo:Stakeholder |

| IRI | https://w3id.org/airo#AISubject |

| Term | AISubject |

| Label | AI Subject |

| Definition | An entity that is subject to or impacted by the use of AI. |

| SubClassOf | airo:Stakeholder |
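
The `SubClassOf` rows above form a small stakeholder hierarchy, so that, for example, an `airo:AIProvider` is also an `airo:AIOperator` and an `airo:Stakeholder`. The sketch below walks that hierarchy in plain Python. It assumes each class has at most one direct superclass, which holds for the stakeholder classes listed in this section; the helper names `superclasses` and `is_a` are hypothetical.

```python
# Direct SubClassOf relations as stated in the stakeholder term tables.
SUBCLASS_OF = {
    "airo:AIOperator": "airo:Stakeholder",
    "airo:AIProvider": "airo:AIOperator",
    "airo:AIDeployer": "airo:AIOperator",
    "airo:AIDeveloper": "airo:Stakeholder",
    "airo:AIUser": "airo:Stakeholder",
    "airo:AISubject": "airo:Stakeholder",
}


def superclasses(cls):
    """Return all superclasses of `cls`, nearest first."""
    chain = []
    while cls in SUBCLASS_OF:
        cls = SUBCLASS_OF[cls]
        chain.append(cls)
    return chain


def is_a(cls, ancestor):
    """True if `cls` is `ancestor` or one of its (transitive) subclasses."""
    return cls == ancestor or ancestor in superclasses(cls)


print(superclasses("airo:AIProvider"))            # ['airo:AIOperator', 'airo:Stakeholder']
print(is_a("airo:AIDeployer", "airo:Stakeholder"))  # True
print(is_a("airo:AIUser", "airo:AIOperator"))       # False
```

A check like `is_a` is what makes a property with range `airo:Stakeholder`, such as `hasStakeholder`, applicable to providers, deployers, users, and AI subjects alike.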

| IRI | https://w3id.org/airo#Frequency |

| Term | Frequency |

| Label | Frequency |

| Definition | Number of events or outcomes per defined unit of time. |

| Source | ISO 31073:2022, 3.3.20 |

| IRI | https://w3id.org/airo#Documentation |

| Term | Documentation |

| Label | Documentation |

| Definition | Refers to documented information. |

| IRI | https://w3id.org/airo#Standard |

| Term | Standard |

| Label | Standard |

| Definition | A technical specification, adopted by a recognised standardisation body, for repeated or continuous application, with which compliance is not compulsory. |

| Source | Regulation (EU) No 1025/2012 on European standardisation |

| IRI | https://w3id.org/airo#Regulation |

| Term | Regulation |

| Label | Regulation |

| Definition | A binding legislative act. |

| IRI | https://w3id.org/airo#CodeOfConduct |

| Term | CodeOfConduct |

| Label | Code Of Conduct |

| Definition | A non-binding set of norms and responsibilities. |

| IRI | https://w3id.org/airo#Change |

| Term | Change |

| Label | Change |

| Definition | An alteration in an entity. |

| IRI | https://www.w3.org/ns/dcat#Dataset |

| IRI | https://w3id.org/dpv#Data |

| IRI | https://w3id.org/dpv#SensitiveData |

| IRI | https://w3id.org/dpv#DataSource |

| IRI | https://w3id.org/dpv#DataSubject |

| IRI | https://w3id.org/dpv#LegalBasis |

| IRI | https://w3id.org/dpv#Processing |

| IRI | http://www.w3.org/ns/dqv#Metric |

| IRI | http://www.w3.org/ns/dqv#Dimension |

| IRI | http://www.w3.org/ns/dqv#QualityMeasurement |

| IRI | https://w3id.org/airo#isAppliedWithinDomain |

| Term | isAppliedWithinDomain |

| Label | is applied within domain |

| Definition | Specifies the domain an AI system is used within |

| Domain | airo:AISystem, airo:AIComponent |

| Range | airo:Domain |

| IRI | https://w3id.org/airo#hasPurpose |

| Term | hasPurpose |

| Label | has purpose |

| Definition | Indicates the purpose of an entity, e.g. AI system, components. |

| Range | airo:Purpose |

| IRI | https://w3id.org/airo#isUsedWithinLocality |

| Term | isUsedWithinLocality |

| Label | is used within locality |

| Definition | Indicates the locality of use. |

| Domain | airo:AISystem, airo:AIComponent |

| Range | airo:LocalityOfUse |

| IRI | https://w3id.org/airo#hasAISubject |

| Term | hasAISubject |

| Label | has AI subject |

| Definition | Indicates the subjects of an AI system |

| Domain | airo:AISystem |

| Range | airo:AISubject |

| IRI | https://w3id.org/airo#hasCapability |

| Term | hasCapability |

| Label | has capability |

| Definition | Specifies capabilities implemented within an AI system to materialise its purposes. |

| Domain | airo:AISystem, airo:AIComponent |

| Range | airo:AICapability |

| IRI | https://w3id.org/airo#usesTechnique |

| Term | usesTechnique |

| Label | uses technique |

| Definition | Indicates the AI techniques used in an AI system or component |

| Domain | airo:AISystem, airo:AIComponent |

| Range | airo:AITechnique |

| IRI | https://w3id.org/airo#producesOutput |

| Term | producesOutput |

| Label | produces output |

| Definition | Specifies an output generated by an AI system or component. |

| Domain | airo:AISystem, airo:AIComponent |

| Range | airo:Output |

| IRI | https://w3id.org/airo#hasComponent |

| Term | hasComponent |

| Label | has component |

| Definition | Indicates a component incorporated in an AI system or in another component. |

| Range | airo:AIComponent |

| IRI | https://w3id.org/airo#hasModel |

| Term | hasModel |

| Label | has model |

| Definition | Indicates machine learning models used with a system or component. |

| Range | airo:AIModel |

| SubPropertyOf | airo:hasComponent |

| IRI | https://w3id.org/airo#hasTrainingData |

| Term | hasTrainingData |

| Label | has training data |

| Definition | Indicates datasets used in training. |

| Range | airo:Data |

| SubPropertyOf | airo:hasComponent |

| IRI | https://w3id.org/airo#hasTestingData |

| Term | hasTestingData |

| Label | has testing data |

| Definition | Indicates datasets used for testing. |

| Range | airo:Data |

| SubPropertyOf | airo:hasComponent |

| IRI | https://w3id.org/airo#hasValidationData |

| Term | hasValidationData |

| Label | has validation data |

| Definition | Indicates datasets used for validation. |

| Range | airo:Data |

| SubPropertyOf | airo:hasComponent |

| IRI | https://w3id.org/airo#hasInput |

| Term | hasInput |

| Label | has input |

| Definition | Indicates the input an entity needs to process to generate output. |

| Domain | airo:AISystem, airo:AIComponent |

| Range | airo:Input |

| IRI | https://w3id.org/airo#runsOnHardware |

| Term | runsOnHardware |

| Label | runs on hardware |

| Definition | Indicates the hardware on which the system runs. |

| Domain | airo:AISystem, airo:AIComponent |

| Range | airo:HardwarePlatform |

| IRI | https://w3id.org/airo#hasStakeholder |

| Term | hasStakeholder |

| Label | has stakeholder |

| Definition | Indicates stakeholders of an AI system or component. |

| Domain | airo:AISystem, airo:AIComponent |

| Range | airo:Stakeholder |

| IRI | https://w3id.org/airo#isProvidedBy |

| Term | isProvidedBy |

| Label | is provided by |

| Definition | Indicates provider of an AI system or component. |

| Domain | airo:AISystem, airo:AIComponent |

| Range | airo:AIProvider |

| IRI | https://w3id.org/airo#isDeployedBy |

| Term | isDeployedBy |

| Label | is deployed by |

| Definition | Indicates the deployer of an AI system or component. |

| Domain | airo:AISystem, airo:AIComponent |

| Range | airo:AIDeployer |

| IRI | https://w3id.org/airo#isDevelopedBy |

| Term | isDevelopedBy |

| Label | is developed by |

| Definition | Indicates the developer of an AI system or component. |

| Domain | airo:AISystem, airo:AIComponent |

| Range | airo:AIDeveloper |

| IRI | https://w3id.org/airo#isUsedBy |

| Term | isUsedBy |

| Label | is used by |

| Definition | Indicates user of an AI system |

| Domain | airo:AISystem |

| Range | airo:AIUser |

| IRI | https://w3id.org/airo#hasModality |

| Term | hasModality |

| Label | has modality |

| Definition | Indicates the modality in which an entity exists. |

| Range | airo:Modality |

| IRI | https://w3id.org/airo#hasLicense |

| Term | hasLicense |

| Label | has license |

| Definition | Indicates licenses associated with a resource. |

| Range | airo:License |

| IRI | https://w3id.org/airo#hasVersion |

| Term | hasVersion |

| Label | has version |

| Definition | Indicates the version of an entity, e.g. AI system. |

| Range | airo:Version |

| IRI | https://w3id.org/airo#hasSeverity |

| Term | hasSeverity |

| Label | has severity |

| Definition | Indicates severity of a consequence or an impact. |

| Domain | airo:Consequence, airo:Impact |

| Range | airo:Severity |

| IRI | https://w3id.org/airo#hasLifecyclePhase |

| Term | hasLifecyclePhase |

| Label | has lifecycle phase |

| Definition | Indicates the lifecycle phase of the AI system or component. |

| Domain | airo:AISystem, airo:AIComponent |

| Range | airo:AILifecyclePhase |

| IRI | https://w3id.org/airo#hasDocumentation |

| Term | hasDocumentation |

| Label | has documentation |

| Definition | Indicates documentation associated with an entity, e.g. AI model, AI system. |

| Range | airo:Documentation |

| IRI | https://w3id.org/airo#hasAutomationLevel |

| Term | hasAutomationLevel |

| Label | has automation level |

| Definition | Indicates the system's level of automation. |

| Domain | airo:AISystem |

| Range | airo:AutomationLevel |

| IRI | https://w3id.org/airo#hasControlOverAIOutput |

| Term | hasControlOverAIOutput |

| Label | has control over AI output |

| Definition | Indicates the level of control a stakeholder has over outputs produced by an AI system. |

| Domain | airo:Stakeholder |

| Range | airo:ModeOfOutputControllability |

| IRI | https://w3id.org/airo#hasHumanInvolvement |

| Term | hasHumanInvolvement |

| Label | has human involvement |

| Definition | Indicates the type of involvement of a human. |

| Domain | airo:Stakeholder |

| Range | airo:HumanInvolvement |

| IRI | https://w3id.org/airo#hasRisk |

| Term | hasRisk |

| Label | has risk |

| Definition | Indicates risks associated with an AI system, an AI component, etc. |

| Range | airo:Risk |

| IRI | https://w3id.org/airo#isRiskSourceFor |

| Term | isRiskSourceFor |

| Label | is risk source for |

| Definition | Specifies risks caused by materialisation of a risk source. |

| Domain | airo:RiskSource |

| Range | airo:Risk |

| IRI | https://w3id.org/airo#hasConsequence |

| Term | hasConsequence |

| Label | has consequence |

| Definition | Specifies consequences caused by materialisation of a risk. |

| Domain | airo:Risk |

| Range | airo:Consequence |

| IRI | https://w3id.org/airo#hasImpact |

| Term | hasImpact |

| Label | has impact |

| Definition | Specifies the impact caused by materialisation of a consequence. |

| Domain | airo:Consequence |

| Range | airo:Impact |

| IRI | https://w3id.org/airo#hasImpactOnArea |

| Term | hasImpactOnArea |

| Label | has impact on area |

| Definition | Specifies the area (e.g. physical health) that is impacted by an AI system or component. |

| Domain | airo:Impact |

| Range | airo:AreaOfImpact |

| IRI | https://w3id.org/airo#hasImpactOnEntity |

| Term | hasImpactOnEntity |

| Label | has impact on entity |

| Definition | Indicates entities, e.g. environment, impacted by AI systems. |

| Domain | airo:Impact |

| IRI | https://w3id.org/airo#hasImpactOnStakeholder |

| Term | hasImpactOnStakeholder |

| Label | has impact on stakeholder |

| Definition | Indicates the people that are (negatively) impacted by an AI system or component. |

| Domain | airo:Impact |

| Range | airo:Stakeholder |

| SubPropertyOf | airo:hasImpactOnEntity |
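
Taken together, the domains and ranges of `isRiskSourceFor`, `hasConsequence`, `hasImpact`, and `hasImpactOnArea` describe a chain from a risk source, through a risk and its consequence, to an impact and the area it affects. The following sketch follows that chain over plain Python triples. The `ex:` instances are hypothetical examples invented for illustration, and `follow_chain` is not part of AIRO.

```python
from collections import defaultdict

# Properties traversed in order: RiskSource -> Risk -> Consequence -> Impact -> AreaOfImpact.
CHAIN = [
    "airo:isRiskSourceFor",
    "airo:hasConsequence",
    "airo:hasImpact",
    "airo:hasImpactOnArea",
]


def follow_chain(triples, start, chain=CHAIN):
    """Return the nodes reached from `start` by following `chain` step by step."""
    index = defaultdict(list)
    for s, p, o in triples:
        index[(s, p)].append(o)
    frontier = [start]
    for prop in chain:
        frontier = [o for node in frontier for o in index[(node, prop)]]
    return frontier


# Hypothetical risk description for a recruitment system.
triples = [
    ("ex:BiasedTrainingData", "airo:isRiskSourceFor", "ex:UnfairRanking"),
    ("ex:UnfairRanking", "airo:hasConsequence", "ex:QualifiedCandidateRejected"),
    ("ex:QualifiedCandidateRejected", "airo:hasImpact", "ex:Discrimination"),
    ("ex:Discrimination", "airo:hasImpactOnArea", "ex:RightToNonDiscrimination"),
]

print(follow_chain(triples, "ex:BiasedTrainingData"))  # ['ex:RightToNonDiscrimination']
```

Passing a shorter `chain`, for instance only the first two properties, returns the consequences reachable from a risk source instead of the impacted areas.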

| IRI | https://w3id.org/airo#hasLikelihood |

| Term | hasLikelihood |

| Label | has likelihood |

| Definition | Indicates the probability of occurrence of risks, risk sources, consequences, or impacts. |

| Domain | airo:RiskConcept |

| Range | airo:Likelihood |

| IRI | https://w3id.org/airo#hasRiskControl |

| Term | hasRiskControl |

| Label | has risk control |

| Definition | Indicates the control measures associated with a system or component to modify risks. |

| Range | airo:RiskControl |

| IRI | https://w3id.org/airo#modifiesRiskConcept |

| Term | modifiesRiskConcept |

| Label | modifies risk concept |

| Definition | Indicates the control employed to modify risks, risk sources, consequences, and impacts. |

| Domain | airo:RiskControl |

| Range | airo:RiskConcept |

| IRI | https://w3id.org/airo#detectsRiskConcept |

| Term | detectsRiskConcept |

| Label | detects risk concept |

| Definition | Indicates the control used for detecting risks, risk sources, consequences, and impacts. |

| Domain | airo:RiskControl |

| Range | airo:RiskConcept |

| SubPropertyOf | airo:modifiesRiskConcept |

| IRI | https://w3id.org/airo#eliminatesRiskConcept |

| Term | eliminatesRiskConcept |

| Label | eliminates risk concept |

| Definition | Indicates the control used for eliminating risks, risk sources, consequences, and impacts. |

| Domain | airo:RiskControl |

| Range | airo:RiskConcept |

| SubPropertyOf | airo:modifiesRiskConcept |

| IRI | https://w3id.org/airo#mitigatesRiskConcept |

| Term | mitigatesRiskConcept |

| Label | mitigates risk concept |

| Definition | Indicates the control used for mitigating risks, risk sources, consequences, and impacts. |

| Domain | airo:RiskControl |

| Range | airo:RiskConcept |

| SubPropertyOf | airo:modifiesRiskConcept |
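
Because `detectsRiskConcept`, `eliminatesRiskConcept`, and `mitigatesRiskConcept` are each declared a sub-property of `airo:modifiesRiskConcept`, any statement using one of them also implies a `modifiesRiskConcept` statement. A reasoner would normally derive this; the sketch below shows the same inference by hand over plain Python triples. The `ex:` instances and the `with_inferred` helper are hypothetical.

```python
# Sub-property declarations from the three term tables above.
SUBPROPERTY_OF = {
    "airo:detectsRiskConcept": "airo:modifiesRiskConcept",
    "airo:eliminatesRiskConcept": "airo:modifiesRiskConcept",
    "airo:mitigatesRiskConcept": "airo:modifiesRiskConcept",
}


def with_inferred(triples):
    """Return the triples plus those implied by the sub-property declarations."""
    inferred = set(triples)
    for s, p, o in triples:
        if p in SUBPROPERTY_OF:
            inferred.add((s, SUBPROPERTY_OF[p], o))
    return inferred


# Hypothetical controls attached to a hypothetical risk.
stated = [
    ("ex:BiasAudit", "airo:detectsRiskConcept", "ex:UnfairRanking"),
    ("ex:HumanReview", "airo:mitigatesRiskConcept", "ex:UnfairRanking"),
]

controls = sorted(
    s for (s, p, o) in with_inferred(stated)
    if p == "airo:modifiesRiskConcept" and o == "ex:UnfairRanking"
)
print(controls)  # ['ex:BiasAudit', 'ex:HumanReview']
```

Querying on the super-property in this way retrieves every control that acts on a risk concept, regardless of whether it detects, eliminates, or mitigates it.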

| IRI | https://w3id.org/airo#isFollowedByControl |

| Term | isFollowedByControl |

| Label | is followed by control |

| Definition | Specifies the order of controls. |

| Domain | airo:RiskControl |

| Range | airo:RiskControl |

| IRI | https://w3id.org/airo#isPartOfControl |

| Term | isPartOfControl |

| Label | is part of control |

| Definition | Specifies composition of controls |

| Domain | airo:RiskControl |

| Range | airo:RiskControl |

| IRI | https://w3id.org/airo#hasVulnerability |

| Term | hasVulnerability |

| Label | has vulnerability |

| Definition | Indicates vulnerabilities associated with an entity, e.g. AI system and its components. |

| Range | airo:Vulnerability |

| IRI | https://w3id.org/airo#exploitsVulnerability |

| Term | exploitsVulnerability |

| Label | exploits vulnerability |

| Definition | Indicates vulnerabilities exploited by a risk source. |

| Domain | airo:RiskSource |

| Range | airo:Vulnerability |

| IRI | https://w3id.org/airo#hasAIUser |

| Term | hasAIUser |

| Label | has AI user |

| Definition | Indicates the end-user of an AI system. |

| Domain | airo:AISystem |

| Range | airo:AIUser |

| IRI | https://w3id.org/airo#compliesWithRegulation |

| Term | compliesWithRegulation |

| Label | complies with regulation |

| Definition | Indicates compliance with a specific regulation. |

| Range | airo:Regulation |

| IRI | https://w3id.org/airo#conformsToStandard |

| Term | conformsToStandard |

| Label | conforms to standard |

| Definition | Indicates conformance of an entity to a standard. |

| Range | airo:Standard |

| IRI | https://w3id.org/airo#followsCodeOfConduct |

| Term | followsCodeOfConduct |

| Label | follows code of conduct |

| Definition | Indicates the code of conduct followed. |

| Range | airo:CodeOfConduct |

| IRI | https://w3id.org/airo#hasChangedEntity |

| Term | hasChangedEntity |

| Label | has changed entity |

| Definition | Indicates the entity that is being changed. |

| Domain | airo:Change |

| IRI | https://w3id.org/airo#hasPreDeterminedChange |

| Term | hasPreDeterminedChange |

| Label | has pre-determined change |

| Definition | Indicates the changes that are planned to be applied to the system, components, or context of use. |

| Range | airo:Change |

| IRI | https://w3id.org/airo#hasFrequency |

| Term | hasFrequency |

| Label | has frequency |

| Definition | Indicates frequency of an event, e.g. change. |

| Range | airo:Frequency |

| IRI | https://www.w3.org/TR/vocab-dqv/#dqv:hasQualityMeasurement |

| IRI | https://www.w3.org/TR/vocab-dqv/#dqv:isMeasurementOf |

| IRI | https://www.w3.org/TR/vocab-dqv/#dqv:inDimension |

| IRI | https://www.w3.org/TR/vocab-dqv/#dqv:expectedDataType |

| IRI | https://w3id.org/dpv#hasData |

| IRI | https://w3id.org/dpv#hasDataSource |

| IRI | https://w3id.org/dpv#hasDataSubject |

| IRI | https://w3id.org/dpv#hasLegalBasis |

| IRI | https://w3id.org/dpv#hasProcessing |

This project has received funding from the European Union's Horizon 2020 research and innovation programme under the Marie Skłodowska-Curie grant agreement No 813497, as part of the ADAPT SFI Centre for Digital Media Technology, which is funded by Science Foundation Ireland through the SFI Research Centres Programme and is co-funded under the European Regional Development Fund (ERDF) through Grant #13/RC/2106_P2.